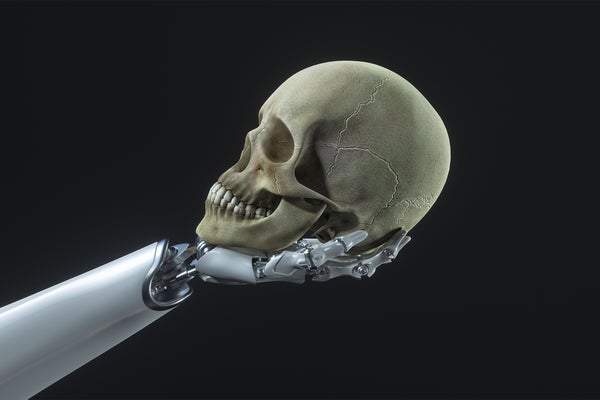

“The machines rose from the ashes of the nuclear fire.” Skulls crushed under robot tank treads. Laser flashes from floating shadowy crafts. Small human figures scurrying to escape.

These iconic images open The Terminator, the film that, perhaps like no other, coalesced the sci-fi fears of the 1980s into a single (rather good) movie set in Los Angeles in 2029, in the wake of nuclear war.

As we rush headlong into the AI future, with arms races renewed once again, and too little thought being given to safety concerns, are we headed for something like Hollywood’s hellscape where machines have killed or subjugated almost the entire human race?

Well, yes, it’s happening now. Russia and Ukraine have been exchanging drone attacks for two years, with an increasing number of these drones being autonomous. Israel has allegedly been using AI targeting programs for thousands of infrastructure and human targets in Gaza, in many cases with little to no human supervision.

Closer to home, the U.S. Department of Defense has been loud and public about its plans to incorporate AI into many aspects of its weapons systems. Deputy Defense Secretary Kathleen Hicks said while unveiling a new AI strategy at the Pentagon in late 2023: “As we’ve focused on integrating AI into our operations responsibly and at speed, our main reason for doing so has been straightforward: because it improves our decision advantage.”

The trend toward incorporating AI into weapons systems is mostly due to its speed in integrating information to reach decisions; AI is already thousands of times faster than humans in many tasks, and will soon be millions, then billions of times faster. This “accelerating decision advantage” logic, the subtitle of the new AI strategy document, can apply across all kinds of weapons systems, including nuclear weapons.

Experts have warned about incorporating advanced AI systems into surveillance and weapons launch systems, including in a December book by James Johnson, AI and the Bomb: Nuclear Strategy and Risk in the Digital Age, which argues that we are on track for a world where AI is incorporated into nuclear weapons systems, the most dangerous weapons ever developed. Former senator Sam Nunn of Georgia, a long-respected voice in defense policy, and many others have also warned about the potential catastrophe(s) that could occur if AI is used this way.

One of the seven principles “for global nuclear order,” set forth in a document signed by Nunn and others associated with the Nuclear Threat Initiative he co-founded, is: “Creating robust and accepted methods to increase decision time for leaders, especially during heightened tensions and extreme situations when leaders fear their nations may be under threat of attack, could be a common conceptual goal that links both near- and long-term steps for managing instability and working toward mutual security.” It’s immediately clear that this principle goes in the opposite direction of the current put-AI-into-everything trend. Indeed, the next principle that Nunn and his co-authors offer recognizes this inherent conflict: “Artificial intelligence … can dangerously compress decision time and complicate the safe management of highly lethal weapons.”

I interviewed military and AI experts about these issues and while there was no consensus about the specific nature of the threat or its solution(s), there was a shared sense of very serious potential harm from AI in the use of nuclear weapons.

In February the State Department released a major report on AI safety issues from Gladstone AI, a company founded by national security experts Edouard and Jeremie Harris. I reached out to them about what I saw as some omissions in their report. While Edouard did not view AI being incorporated into nuclear weapons surveillance and launch decisions as a near-term threat (their report focused more on conventional weapons systems), he stated at the end of our discussion that fear of being scooped or duped by enemies using AI is a “meaningful risk factor” in potential military escalation. He suggested that “governments are less likely to give AI control over nuclear weapons … than they are to give AI control over conventional weapons.”

But in our dialogue, he recognized that fear of being duped by AI disinformation “is a possible reason states could be motivated to hand over control of their nuclear arsenals to AI systems: those AI systems could be more competent than humans at making judgment calls in the face of adversarial AI-generated misinformation.” This is a scenario sketched by Johnson in AI and the Bomb.

John von Neumann, the brilliant Hungarian-American mathematician who developed game theory into a branch of mathematics, was an advocate of nuclear first strikes as a type of “preventive war.” For him, game theory and good sense strongly suggested that the U.S. strike the Soviet Union hard before it developed a nuclear arsenal large enough to make any such strike mutual suicide. He remarked in 1950: “If you say why not bomb them tomorrow, I say why not today? If you say today at five o’clock, I say why not one o’clock?”

Despite this luminary’s hawkish recommendation, the U.S. did not in fact strike the Soviets, with either nuclear or conventional bombs. But will AI incorporated into conventional and nuclear weapons systems follow a hawkish game theory approach, à la von Neumann? Or will the programs adopt more empathetic and humane decision-making guidelines?

We surely can’t know today, and the defense policy makers and their AI programmers won’t know either, because the workings of today’s large language model (LLM) AIs are famously a “black box.” That means we simply don’t know how these machines make decisions. While military-focused AI will surely be trained and given guidelines, there is no way to know how AI will respond in any particular context until after the fact.

And that is the truly profound and chilling risk of the headlong rush to incorporate AI into our weapons systems.

Some argue that nuclear weapons systems are different from conventional weapons systems, and that military decision-makers will resist the urge to incorporate AI into these systems because the stakes are so much higher and because there may not be any significant advantages in dramatically accelerating launch decisions.

The ascendance of tactical nuclear weapons, much smaller and designed for potential battlefield use, significantly blurs these lines. Russia’s saber-rattling on potential use of tactical nuclear weapons in Ukraine makes this distinction all too real.

We need robust laws now to prevent humans from being eliminated from nuclear decision-making. The end goal is no “nuclear ashes” and no Skynet. Ever.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.