One afternoon in early 1994 a couple of astronomers sitting in an air-conditioned computer room at an observatory headquarters in the coastal town of La Serena, Chile, got to talking. Nicholas Suntzeff, an associate astronomer at the Cerro Tololo Inter-American Observatory, and Brian Schmidt, who had recently completed his doctoral thesis at the Center for Astrophysics | Harvard & Smithsonian, were specialists in supernovae—exploding stars. Suntzeff and Schmidt decided that the time had finally come to use their expertise to tackle one of the fundamental questions in cosmology: What is the fate of the universe?

Specifically, in a universe full of matter that is gravitationally attracting all other matter, logic dictates that the expansion of space—which began at the big bang and has continued ever since—would be slowing. But by how much? Just enough that the expansion will eventually come to an eternal standstill? Or so much that the expansion will eventually reverse itself in a kind of about-face big bang?

They grabbed the nearest blue-and-gray sheet of IBM printout paper, flipped it over and began scribbling a plan: the telescopes to secure, the peers to recruit, the responsibilities to delegate.

Meanwhile some 9,600 kilometers up the Pacific Coast, a collaboration at Lawrence Berkeley National Laboratory in California, operating under the leadership of physicist Saul Perlmutter, was already pursuing the same goal, using the same supernova approach and relying on the same underlying logic. Suntzeff and Schmidt knew about Perlmutter’s Supernova Cosmology Project (SCP). But they also knew that the SCP team consisted primarily of physicists who, like Perlmutter himself, were learning astronomy on the fly. Surely, Schmidt and Suntzeff reassured each other, a team of actual astronomers could catch up.

And their team did, just in time. In 1998 the rival collaborations independently reached the same conclusion as to how much the expansion of the universe is slowing down: it’s not. It’s speeding up.

Last year marked the 25th anniversary of the discovery of evidence for “dark energy”—a moniker for whatever is driving the acceleration that even then meant next to nothing yet encompassed nearly everything. The coinage was almost a joke, and the joke was on us. If dark energy were real, it would constitute two thirds of all the mass and energy in the universe—that is, two thirds of what people had always assumed, from the dawn of civilization onward, to be the universe in its entirety. Yet what that two thirds of the universe was remained a mystery.

A quarter of a century later that summary still applies. Which is not to suggest, however, that science has made no progress. Over the decades observers have gathered ever more convincing evidence of dark energy’s existence, and this effort continues to drive a significant part of observational cosmology while inspiring ever more ingenious methods to, if not detect, at least define it. But right from the start—in the first months of 1998—theorists recognized that dark energy presents an existential problem of more immediate urgency than the fate of the universe: the future of physics.

The mystery of why a universe full of matter gravitationally attracting all other matter hasn’t yet collapsed on itself has haunted astronomy at least since Isaac Newton’s introduction of a universal law of gravitation. In 1693, only six years after the publication of his Principia, Newton acknowledged to an inquiring cleric that positing a universe in perpetual equilibrium is akin to making “not one Needle only, but an infinite number of them (so many as there are particles in an infinite Space) stand accurately poised upon their Points. Yet I grant it possible,” he immediately added, “at least by a divine Power.”

“It was a great missed opportunity for theoretical physics,” the late Stephen Hawking wrote in a 1999 introduction to a new translation of Principia. “Newton could have predicted the expansion of the universe.”

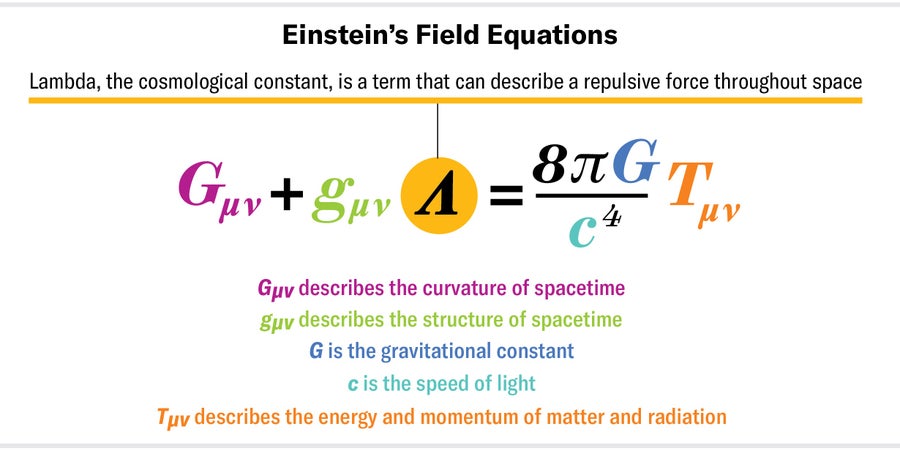

So, too, Einstein. When, in 1917, he applied his equations for general relativity to cosmology, he confronted the same problem as Newton. Unlike Newton, though, Einstein added to the equation not a divine power but the Greek symbol lambda (Λ), an arbitrary mathematical shorthand for whatever was keeping the universe in perfect balance.

Jen Christiansen

The following decade astronomer Edwin Hubble seemingly rendered lambda superfluous through his twin discoveries that other “island universes,” or galaxies, exist beyond our own Milky Way and that on the whole those galaxies appear to be receding from us in a fairly straightforward manner: the farther, the faster—as if, perhaps, the universe had emerged from a single explosive event. The 1964 discovery of evidence supporting the big bang theory immediately elevated cosmology from metaphysics to hard science. Only six years later, in an essay in Physics Today that set the agenda for a generation, astronomer (and onetime Hubble protégé) Allan Sandage defined the science of big bang cosmology as “the search for two numbers.” One number was “the rate of expansion” now. The second was the “deceleration in the expansion” over time.

Decades would pass before the first real investigations into the second number got underway, but it was no coincidence that two collaborations more or less simultaneously started work on it at that point. Only then had advances in technology and theory made the search for the deceleration parameter feasible.

In the late 1980s and early 1990s the means by which astronomers gather light was making the transition from analog to digital—from photographic plates, which could collect about 5 percent of the photons that hit them, to charge-coupled devices, which have a photon-collection rate upward of 80 percent. The greater a telescope’s light-gathering capacity, the deeper its view across the universe—and deeper and deeper views across space and (because the speed of light is finite) time are what a search for the expansion rate of the universe requires.

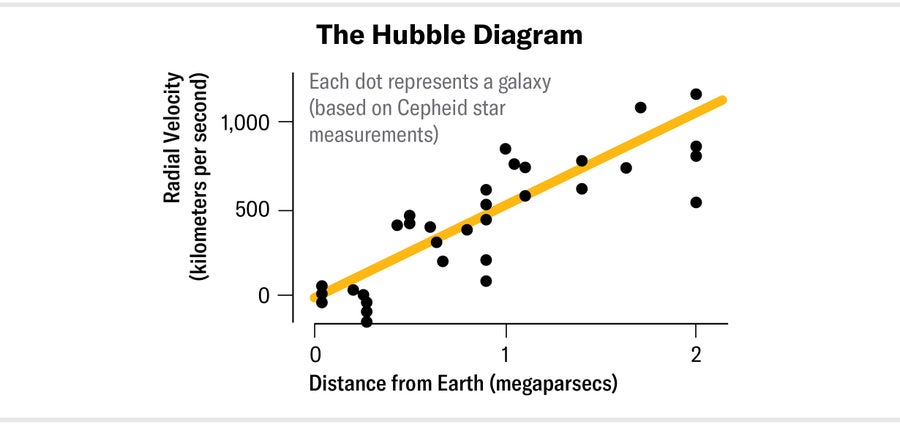

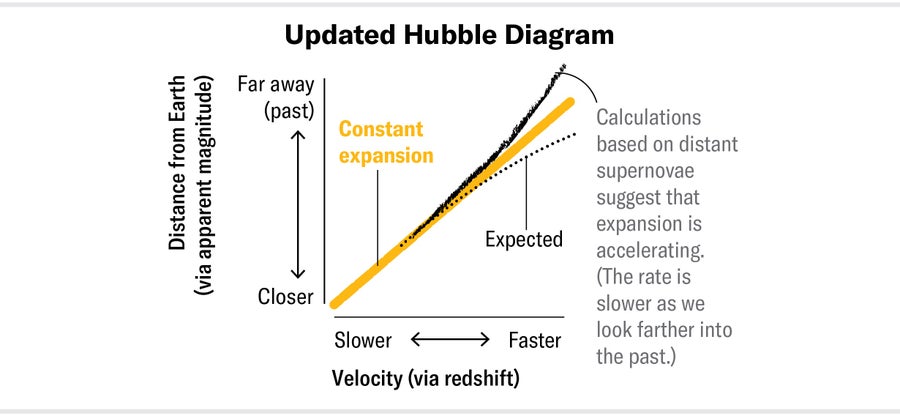

The Hubble diagram, as cosmologists call the graph Hubble used in determining that the universe is expanding, plots two values: the velocities with which galaxies are apparently moving away from us on one axis and the distances of the galaxies from us on the other.

Jen Christiansen

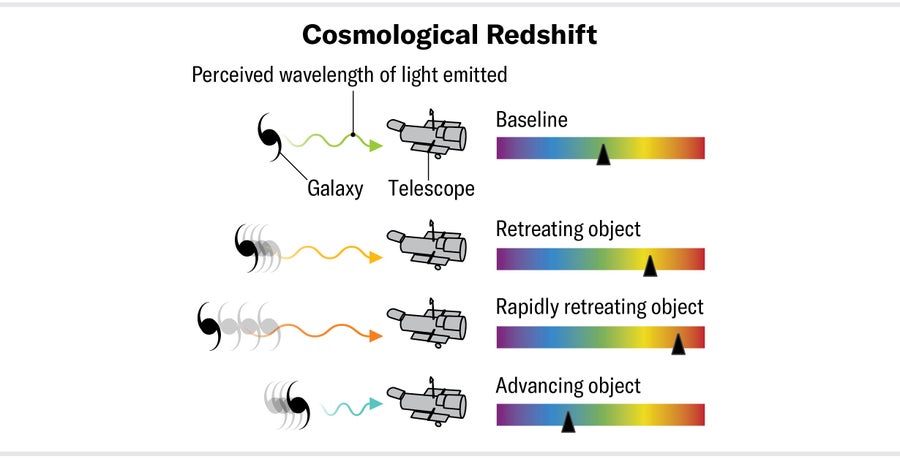

Astronomers can determine galaxies’ velocity—the rate at which the stretching of space is carrying them away from us—by measuring how much their light has shifted toward the red end of the visible portion of the electromagnetic spectrum (their “redshift”).

Jen Christiansen
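The redshift measurement described above can be sketched in a few lines of Python. This is only an illustration: the function names and the sample wavelengths are hypothetical, and the conversion from redshift to velocity uses the simple low-redshift approximation v ≈ cz, which holds only for nearby galaxies.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def redshift(observed_nm: float, rest_nm: float) -> float:
    """Fractional stretch of a spectral line: z = (observed - rest) / rest."""
    return (observed_nm - rest_nm) / rest_nm


def approx_recession_velocity(z: float) -> float:
    """Low-redshift approximation v ~ c * z in km/s (reasonable only for z << 1)."""
    return C_KM_S * z


# Hypothetical example: the hydrogen-alpha line (rest wavelength about 656.28 nm)
# observed at 662.84 nm in a galaxy's spectrum.
z = redshift(662.84, 656.28)
v = approx_recession_velocity(z)
print(f"z = {z:.4f}, v = {v:.0f} km/s")
```

At the redshifts the supernova teams worked with, this approximation breaks down and a full cosmological model is needed to relate redshift to expansion.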

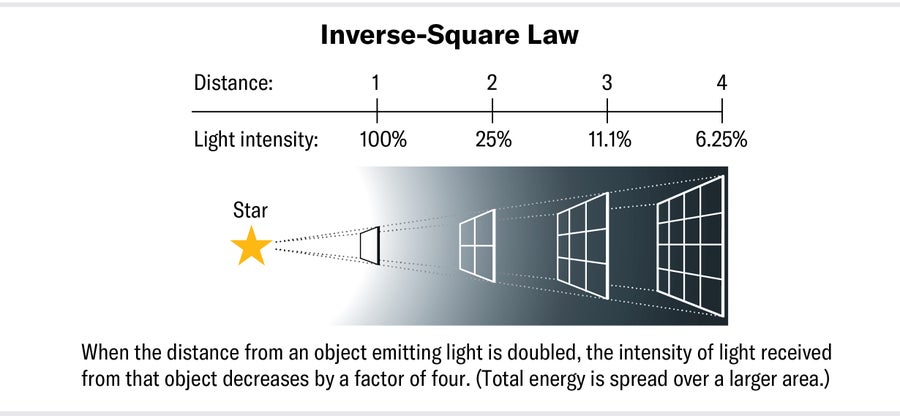

Determining their distance from us, however, is trickier. It requires a “standard candle”—a class of objects whose light output doesn’t change. A 100-watt lightbulb, for instance, is a standard candle. If you know that its absolute luminosity is 100 watts, then you can apply the inverse-square law to its apparent luminosity—how bright it looks to you at your current distance from it—to calculate how far away it actually is.

Jen Christiansen
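The lightbulb reasoning can be written out directly. The sketch below assumes an idealized source that radiates its full 100 watts equally in all directions; the function names and the 50-meter test distance are illustrative choices, not anything from the teams' work.

```python
import math


def flux_at_distance(luminosity_w: float, distance_m: float) -> float:
    """Apparent brightness (W/m^2): the output spread over a sphere of that radius."""
    return luminosity_w / (4.0 * math.pi * distance_m ** 2)


def distance_from_flux(luminosity_w: float, flux_w_m2: float) -> float:
    """Invert the inverse-square law: known output plus measured flux gives distance."""
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_m2))


bulb_w = 100.0                               # the "standard candle": known output
measured = flux_at_distance(bulb_w, 50.0)    # what an observer 50 m away would record
recovered = distance_from_flux(bulb_w, measured)
print(f"flux = {measured:.3e} W/m^2, inferred distance = {recovered:.1f} m")
```

Doubling the distance cuts the measured flux to a quarter, which is why even modest errors in the assumed luminosity translate into distance errors.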

The standard candle that Hubble used in plotting his diagram was a Cepheid variable, a star that brightens and dims at regular intervals. But Cepheid variables are difficult to detect at distances greater than 100 million light-years. Astronomers trying to measure the rate of expansion over the history of the universe would need a standard candle they could observe from billions of light-years away—the kinds of distances that charge-coupled device detectors, with their superior photon-collecting power, could probe.

A candidate for a standard candle emerged in the late 1980s: a type Ia supernova, the explosion of a white dwarf when it accretes too much matter from a companion star. The logic seemed reasonable: if the cause of an explosion is always the same, then so should be the effect—the explosion’s absolute luminosity. Yet further investigations determined that the effect was not uniform; both the apparent brightness and the length of time over which the visibility of the “new star” faded differed from supernova to supernova.

In 1993, however, Mark Phillips, another astronomer at the Cerro Tololo Inter-American Observatory (and a future member of Suntzeff and Schmidt’s team), recognized a correlation between a supernova’s absolute luminosity and the trajectory of its apparent brightness from initial flare through diminution: bright supernovae decline gradually, whereas dim ones decline abruptly. So type Ia supernovae weren’t standard candles, but maybe they were standardizable.

For several years Perlmutter’s SCP collaboration had been banking on type Ia supernovae being standard candles. They had to become standardizable, however, before Schmidt and Suntzeff—as well as their eventual recruits to what they called the High-z collaboration (z being astronomical shorthand for redshift)—could feel comfortable committing their careers to measuring the deceleration parameter.

Hubble’s original diagram had indicated a straight-line correlation of velocity and distance (“indicated” because his error bars wouldn’t survive peer review today). The two teams in the 1990s chose to plot redshift (velocity) on the x axis and apparent magnitude (distance) on the y axis. Assuming that the expansion was in fact decelerating, at some point that line would have to deviate from its 45-degree beeline rigidity, bending downward to indicate that distant objects were brighter and therefore nearer than one might otherwise expect.

From 1994 to 1997 the two groups used the major telescopes on Earth and, crucially, the Hubble Space Telescope to collect data on dozens of supernovae that allowed them to extend the Hubble diagram farther and farther. By the first week of 1998 they both had found evidence that the line indeed diverged from 45 degrees. But instead of curving down, the line was curving up, indicating that the supernovae were dimmer than they expected and that the expansion therefore wasn’t decelerating but accelerating, a conclusion as counterintuitive and, in its own way, revolutionary as Earth not being at the center of the universe.

Jen Christiansen
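Why an accelerating universe makes supernovae look dimmer can be illustrated with a textbook low-redshift expansion of the luminosity distance in terms of a deceleration parameter, q0. The sketch below is not either team's analysis. The Hubble constant of 70 km/s/Mpc and the two q0 values are assumptions chosen for illustration, and the formula is only a rough guide once the redshift approaches 1.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s
H0 = 70.0             # assumed Hubble constant, km/s/Mpc


def luminosity_distance_mpc(z: float, q0: float, h0: float = H0) -> float:
    """Second-order expansion: d_L ~ (c z / H0) * (1 + (1 - q0) z / 2)."""
    return (C_KM_S * z / h0) * (1.0 + 0.5 * (1.0 - q0) * z)


def distance_modulus(z: float, q0: float, h0: float = H0) -> float:
    """Apparent minus absolute magnitude; a larger value means a dimmer object."""
    return 5.0 * math.log10(luminosity_distance_mpc(z, q0, h0)) + 25.0


Q0_DECELERATING = 0.5    # flat universe containing only matter
Q0_ACCELERATING = -0.55  # illustrative: about 30% matter, 70% dark energy

for z in (0.1, 0.3, 0.5):
    dimming = distance_modulus(z, Q0_ACCELERATING) - distance_modulus(z, Q0_DECELERATING)
    print(f"z = {z:.1f}: accelerating case is {dimming:.2f} mag dimmer")
```

On a plot of magnitude against redshift, that growing gap is the upward curve: the same supernova appears fainter, and therefore farther away, than a decelerating universe would allow.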

Yet the astrophysics community accepted it with alacrity. By May, only five months after the discovery, Fermilab had convened a conference to discuss the results. In a straw poll at the end of the conference, two thirds of the attendees—approximately 40 out of 60—voted that they were willing to accept the evidence and consider the existence of “dark energy” (a term invented that year by University of Chicago theoretical cosmologist Michael Turner in a nod to dark matter). Einstein’s lambda, it seemed, was back.

Some of the factors leading to the swift consensus were sociological. Two teams had arrived at the same result independently, that result was the opposite of what they expected, they had used mostly different data (separate sets of supernovae), and everyone in the community recognized the intensity of competition between the two teams. “Their highest aspiration,” Turner says, “was to get a different answer from the other group.”

But one factor at least equally persuasive in consolidating consensus was scientific: the result answered some major questions in cosmology. How could a universe be younger than its oldest stars? How did a universe full of large-scale structures, such as superclusters of galaxies, mature so early as to reach the cosmological equivalent of puberty while it was still a toddler?

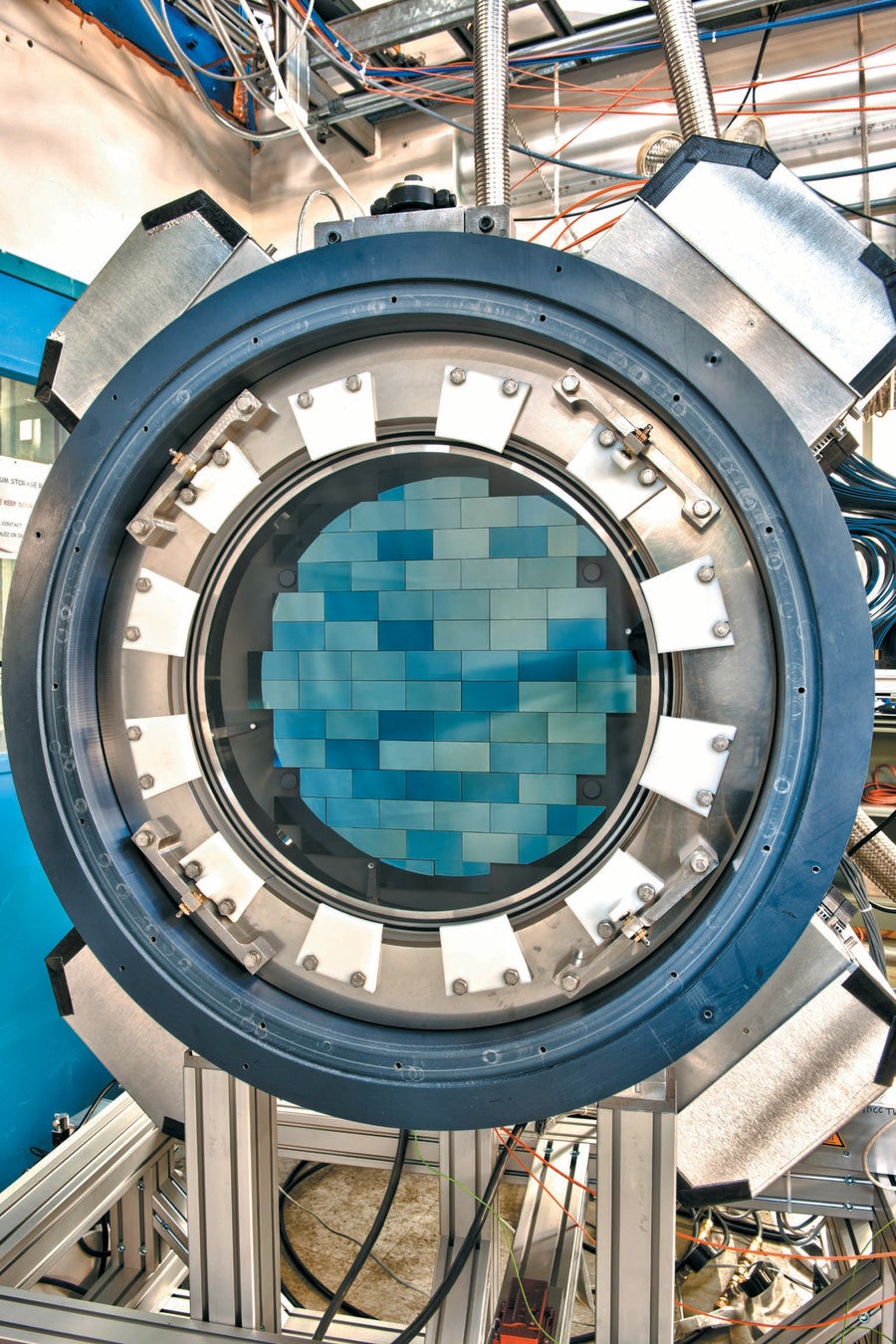

The Dark Energy Survey camera imager uses 74 charge-coupled devices to absorb light from hundreds of millions of galaxies to study the history of the universe’s expansion. The camera is installed on the Víctor M. Blanco Telescope at the Cerro Tololo Inter-American Observatory in Chile.

Reidar Hahn/Fermi National Accelerator Laboratory

Problems solved! An expansion that is speeding up now implies an expansion that was growing less quickly in the past; therefore, more time has passed since the big bang than cosmologists had previously assumed. The universe is older than scientists had thought: that toddler was a teenager after all.

But maybe the most compelling reason scientists were willing to accept the existence of dark energy was that it made the universe add up. For years cosmologists had been wondering why the density of the universe seemed so low. According to the prevailing cosmological model at the time (and today), the universe underwent an “inflation” that started about 10⁻³⁶ second after the big bang (that is, at the fraction of a second that begins with a decimal point and ends 35 zeros and a 1 later) and finished, give or take, 10⁻³³ second after the big bang. In the interim the universe increased its size by a factor of 10²⁶.

Inflation thereby would have “smoothed out” space so that the universe would look roughly the same in all directions, as it does for us, no matter where you are in it. In scientific terms, the universe should be flat. And a flat universe dictates that the ratio between its actual mass-energy density and the density necessary to keep it from collapsing should be 1.

Before 1998, observations had indicated that the composition of the universe was nowhere near this critical density. It was maybe a third of the way there. Some of it would be in the form of baryons, meaning protons and neutrons (the stuff of you, me and our laptops, as well as of planets, galaxies and everything else accessible to telescopes). But most of it would be in the form of dark matter, a component of the universe that is not accessible to telescopes in any part of the electromagnetic spectrum but is detectable, as astronomers had understood since the 1970s, indirectly, such as through gravitational effects on the rotation rates of galaxies. Dark energy would complete that equation: its contribution to the mass-energy density would indeed be in the two-thirds range, just enough to reach critical density.

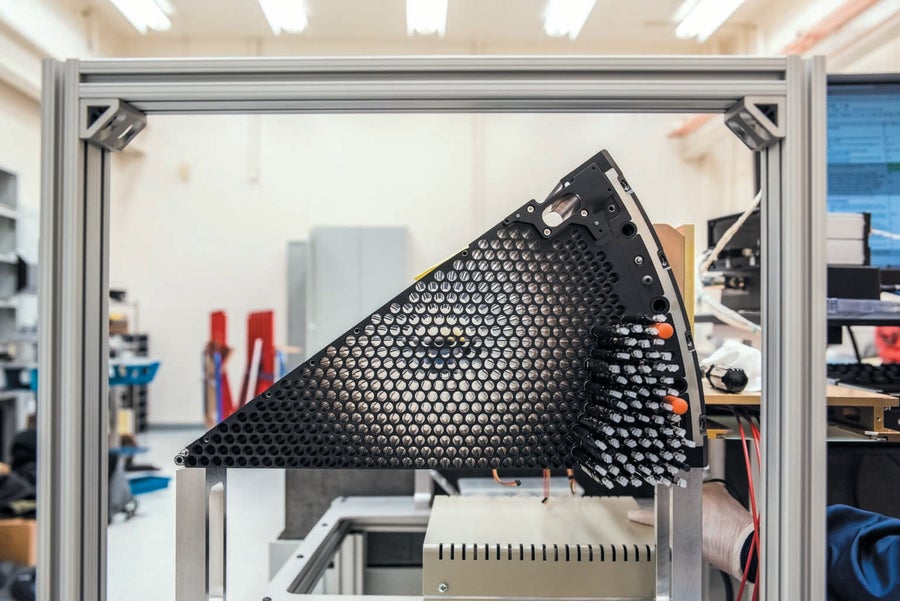

The focal plane of the DESI camera is made of 10 pie-slice-shaped wedges. Each piece holds 500 robotic positioners that can fix on individual galaxies to measure their light.

Marilyn Sargent/©2017 The Regents of the University of California; Lawrence Berkeley National Laboratory

Still, sociological influences and professional preferences aren’t part of the scientific method. (Well, they are, but that’s a separate discussion.) Where, astronomers needed to know, was the empirical evidence? Everywhere, it turned out.

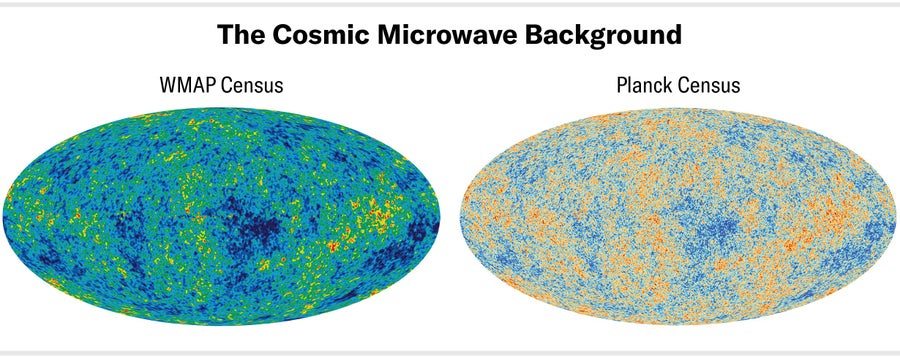

One way to calculate the constitution of the universe is by studying the cosmic microwave background (CMB), the phenomenon discovered in 1964 that transformed cosmology into a science. The CMB is all-sky relic radiation dating to when the universe was only 379,000 years old, when atoms and light were emerging from the primordial plasma and going their separate ways. The CMB’s bath of warm reds and cool blues represents the temperature variations that are the matter-and-energy equivalent of the universe’s DNA. Take that picture, then compare it with simulations of millions of universes, each with its own amounts of baryonic matter, dark matter and dark energy. Hypothetical universes with no regular matter or dark matter and 100 percent dark energy, or with 100 percent regular matter and no dark matter or dark energy, or with any combination in between will all produce unique color patterns.

The Wilkinson Microwave Anisotropy Probe (WMAP), which launched in 2001 and delivered data from 2003 to 2012, provided one such census. Planck, an even more precise space observatory, began collecting its own CMB data in 2009 and released its final results in 2018, corroborating WMAP’s findings: the universe is 4.9 percent the stuff of us, 26.6 percent dark matter and 68.5 percent dark energy.

NASA/WMAP Science Team (WMAP CMB); ESA and the Planck Collaboration (Planck CMB)
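The bookkeeping behind "making the universe add up" is simple enough to check by hand. The sketch below uses the standard expression for the critical density, 3H0²/(8πG), with an assumed Hubble constant of 67.4 km/s/Mpc (a value not given in this article), and then sums the three fractions quoted above.

```python
import math

G = 6.674e-11               # gravitational constant, m^3 kg^-1 s^-2
METERS_PER_MPC = 3.0857e22  # one megaparsec in meters
H0_KM_S_MPC = 67.4          # assumed Hubble constant, km/s/Mpc


def critical_density(h0_km_s_mpc: float) -> float:
    """Density (kg/m^3) of a spatially flat universe: 3 H0^2 / (8 pi G)."""
    h0_si = h0_km_s_mpc * 1000.0 / METERS_PER_MPC  # convert to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G)


# Fractions of the critical density quoted in the text.
budget = {"ordinary matter": 0.049, "dark matter": 0.266, "dark energy": 0.685}

rho_c = critical_density(H0_KM_S_MPC)
matter_only = budget["ordinary matter"] + budget["dark matter"]
omega_total = sum(budget.values())

print(f"critical density ~ {rho_c:.2e} kg/m^3")
print(f"matter alone: {matter_only:.3f} of critical; with dark energy: {omega_total:.3f}")
```

Matter alone comes to roughly a third of the critical density, the shortfall cosmologists puzzled over before 1998. Adding the dark energy share brings the total to 1, the value a flat universe requires.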

Yet for all of dark energy’s standard-model-of-cosmology-salvaging triumphs, a thuddingly obvious question has bothered theorists from the beginning: What is it? Dark energy does help the universe add up but only on the macroscale—the one that falls under the jurisdiction of general relativity. On the microscale, though, it makes no sense.

According to quantum physics, space isn’t empty. It’s a phantasmagoria of particles popping into and out of existence. Each of those particles contains energy, and scientists’ best guess is that this energy accounts for dark energy. It’s a seemingly neat explanation except that quantum physics predicts a density value a lot larger than the two thirds astronomers initially suggested: 10¹²⁰ times larger. As the joke goes, even for cosmology, that’s a big margin of error.

Right at the start, in the winter of 1998, theorists got to work on shrinking that gap. Then they got to more work. They eventually got to so much work that the interplay between observers and theorists threatened to consume the community. Or at least so argued theorist Simon White in a controversial 2007 essay in Reports on Progress in Physics entitled “Why Dark Energy Is Bad for Astronomy.”

The observers weren’t shy about expressing their frustration. At one point during this period of scientific disequilibrium, Adam Riess, lead author on the High-z discovery paper (and the team member who determined mathematically that without the addition of lambda—dark energy—the supernovae data indicated a universe with negative matter), dutifully checked new physics papers every day but, he says, found most of the theories to be “pretty kooky.”

Perlmutter began his public talks with a PowerPoint illustration of papers offering “explanations” of dark energy piling up into the dozens. Schmidt, in his conference presentations, included a slide that simply listed the titles of 47 theories he’d culled from the 2,500 then available in the recent literature, letting not just the quantity but the names speak for themselves: “five-dimensional Ricci flat bouncing cosmology,” “diatomic ghost condensate dark energy,” “pseudo-Nambu-Goldstone boson quintessence.”

“We’re desperate for your help,” Schmidt told one audience of theorists in early 2007. “You tell us [observers] what you need; we’ll go out and get it for you.”

Since then, astronomers’ frustration has turned into an attitude verging on indifference. Today Suntzeff (who eventually ceded leadership of the High-z team to Schmidt for personal reasons; he’s now a distinguished professor at the Mitchell Institute for Fundamental Physics & Astronomy in College Station, Tex.) says he barely glances at the daily outpouring of online papers. Richard Ellis, an astronomer on the SCP discovery team, says that “there are endless theories of what dark energy might be, but I tend not to give them much credence.” To find out what dark energy is, theorists need to know how it behaves. For instance, does it change over space and time? “We really need more precise observations to make progress,” Ellis adds.

More precise observations are what they’ll be getting.

Type Ia surveys continue to fill the Hubble diagram with more and more data points, and those data points are squeezing within more and more compact error bars. Such uniformity might be more gratifying if theory could explain the observations. Instead cosmologists find themselves having to go back and really make sure. The trustworthiness of the seeming uniformity depends on the reliability of the underlying schematics—the assumptions that drove the observations in the first place and that continue to guide how astronomers try to measure supernova distances.

“In my opinion, the ‘stock value’ of this method has declined a little over the years,” says Ellis, now an astronomy professor at University College London. One problem he cites is that “it is almost certain that there is more than one physical mechanism that causes a white dwarf in a binary system to explode.” And differing mechanisms might mean data that are, contrary to Phillips’s 1993 breakthrough, nonstandardizable.

Another problem is that analyses of the chemical components of supernovae have shown that older exploding stars contain lighter elements than more recent specimens—an observation consistent with the theory that succeeding generations of supernovae generate heavier and heavier elements. “It’s logical, therefore, that less evolved [older] material arriving on a white dwarf in the past may change the nature of the explosion,” Ellis says. Even so, “astronomers are still very keen to use supernovae.”

For instance, the Nearby Supernova Factory project, an offshoot of the SCP, is using a technique its team members call “twins embedding.” Rather than treating all type Ia supernovae as uniform, like a species, they examine the light properties of individual specimens whose brightness in different wavelengths follows almost exactly the same pattern over time. Once they find matching “twins,” they try to standardize from those data.

In the next two years two new facilities in Chile are expected to see first light and begin to undertake their own surveys of thousands of southern-sky supernovae. The Vera C. Rubin Observatory will locate the objects, and the 4-meter Multi-Object Spectroscopic Telescope will identify their chemical components, helping to clarify how supernovae with more heavy elements might explode differently.

As for space telescopes, researchers continue to mine supernovae in the Hubble archives, and Riess predicts that the James Webb Space Telescope (JWST) “will eventually turn its attention” to high-redshift supernovae once the telescope has addressed more of its primary goals. The community of supernova specialists is also anticipating the Nancy Grace Roman Space Telescope, a successor to JWST that is due for launch by mid-2027.

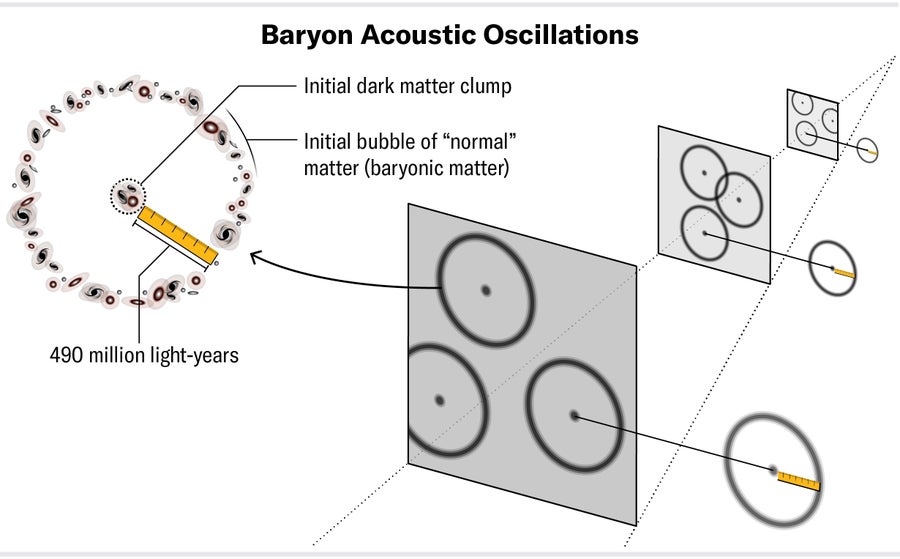

Surveying supernovae, however, is not the only way to measure the nature of dark energy. One alternative is to study baryon acoustic oscillations (BAOs)—soundlike waves that formed when baryon particles bounced off one another in the hot and chaotic early universe. When the universe cooled enough for atoms to coalesce, these waves froze—and they are still visible in the CMB. Similar to the way supernovae serve as standard candles, providing a distance scale stretching from our eyeballs across the universe, BAOs provide a standard ruler—a length scale for lateral separations across the sky. Scientists can measure the distances between densities of oscillations in the CMB, then trace the growth of those distances over space and time as those densities gather into clusters of galaxies. Ellis, an expert on BAO cosmology, calls it “probably the cleanest way to trace the expansion history of the universe.”

Jen Christiansen

Astronomers are awaiting the results from two major BAO surveys that should allow them to reconstruct cosmic evolution at ever earlier eras across the universe. The Dark Energy Spectroscopic Instrument (DESI) on the robotic Nicholas U. Mayall Telescope at Kitt Peak National Observatory in Arizona is collecting optical spectra (light broken up into its constituent wavelengths) for about 35 million galaxies, quasars and stars, from which astronomers will be able to construct a 3D map extending from the nearby objects back to a time when the universe was about a quarter of its present age. The first data, released in June 2023, contained nearly two million objects that researchers are now studying.

In 2025 the Prime Focus Spectrograph—an instrument on the 8.2-meter Subaru Telescope on Mauna Kea, Hawaii—will begin following up on DESI results but at even greater distances, from which the collaboration (Ellis is the co-principal investigator) will complete its own 3D map. And the European Space Agency’s Euclid spacecraft, which launched on July 1, 2023, will contribute its own survey of galaxy evolution to the BAO catalog, but it will also be employing the second nonsupernovae method for measuring the nature of dark energy: weak gravitational lensing.

This relatively new approach exploits a general relativistic effect. Sufficiently massive objects (such as galaxies or galaxy clusters) can serve as magnifying glasses for far more distant objects because of the way mass bends the path of light. Astronomers can then sort the growth of galactic clustering strength over time to track the competition between the gravitational attraction of matter and the repulsive effect of dark energy. Euclid’s data should be available within the next two or three years.

Since the discovery of acceleration, Perlmutter says, cosmologists have been hoping for an experiment that would provide “20 times more precision,” and “we’re now just finally having this possibility in the upcoming five years of seeing what happens when we get to that level.”

Twenty-five years ago the journal Science crowned dark energy 1998’s “Breakthrough of the Year.” Since then, the two pioneering teams and their leaders have racked up numerous awards, culminating in the 2011 Nobel Prize in Physics for Perlmutter (now a professor of physics at the University of California, Berkeley, and a senior scientist at Berkeley Lab), Riess (a distinguished professor at Johns Hopkins University and the Space Telescope Science Institute), and Schmidt (former vice-chancellor of the Australian National University). Dark energy long ago became an essential component of the standard cosmological model, along with baryonic matter, dark matter and inflation.

And yet ... as always in science, the possibility exists that some fundamental assumption is wrong—for instance, as some theorists posit, we might have an incorrect understanding of gravity. Such an error would skew the data, in which case the BAO measurements and Euclid’s weak gravitational lensing results will diverge, and cosmologists will need to rethink their givens.

From a scientific perspective, this outcome wouldn’t be the worst thing. “What got physicists into physics usually is not the desire to understand what we already know,” Perlmutter told me years ago, “but the desire to catch the universe in the act of doing really bizarre things. We love the fact that our ordinary intuitions about the world can be fooled.”

“I’m very glad I said that,” he says now when I remind him of that quote, “because that does feel so much like what I see all around me.” Still, referring to the progress (or lack thereof), he says, “It’s been slow.” He laughs. “It’s nice to have mystery, but it would be nice to have just a little bit more coming from either the experimental side or the theoretical side.”

Maybe the upcoming deluge of data will help theorists discern how dark energy behaves over changing space and time, which would go a long way toward determining the fate of the universe. Until then, the generation of scientists who set out to write the final chapter in the story of the cosmos will have to content themselves with a more modest conclusion: to be continued.